Bachelor's Thesis Project

Astronomical Image Colorization and Up-scaling Using Conditional Generative Adversarial Networks

Introduction

Automated colorization of grayscale images is a growing field in machine learning and computer vision with significant aesthetic and practical implications. It has applications in diverse areas, from restoring black-and-white photographs to image enhancement and video restoration, where added color improves interpretability. Super-resolution imaging aims to reconstruct a high-resolution image from one or more low-resolution images. This improves visualization and recognition for many purposes, but the task is inherently limited by the information already lost in the low-resolution input. Traditional methods rely on smooth interpolation, which adds no new information, whereas Generative Adversarial Networks (GANs) can hallucinate plausible high-frequency detail, though not always with the desired clarity. This is particularly valuable for processing raw Hubble Space Telescope images, which are often low-resolution and difficult to interpret due to noise and other factors. Streamlining image processing with automated colorization and super-resolution can significantly aid astronomers in analyzing vast datasets, which keep growing as new telescopes come online.
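To make the limitation of interpolation concrete, here is a minimal NumPy sketch of separable bilinear upscaling for a single-channel image (the function name `bilinear_upscale` is hypothetical, chosen for illustration). Each output pixel is a weighted average of neighbouring input pixels, so the result can never contain values outside the input's range or detail finer than the original sampling; that missing detail is what a GAN tries to hallucinate.

```python
import numpy as np


def bilinear_upscale(img: np.ndarray, factor: int) -> np.ndarray:
    """Upscale a 2-D grayscale image by an integer factor with bilinear interpolation."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    # Sample positions in input-pixel coordinates (corners of input and output align).
    ys = np.linspace(0, h - 1, h * factor)
    xs = np.linspace(0, w - 1, w * factor)
    # Interpolate along each row first: shape (h, w * factor).
    rows = np.array([np.interp(xs, np.arange(w), img[r]) for r in range(h)])
    # Then along each column of the intermediate result: shape (h * factor, w * factor).
    cols = [np.interp(ys, np.arange(h), rows[:, c]) for c in range(w * factor)]
    return np.array(cols).T


checker = np.array([[0.0, 1.0], [1.0, 0.0]])
print(bilinear_upscale(checker, 2))
```

The 2 × 2 checkerboard becomes a smooth 4 × 4 gradient: the sharp edges are blurred rather than recovered.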

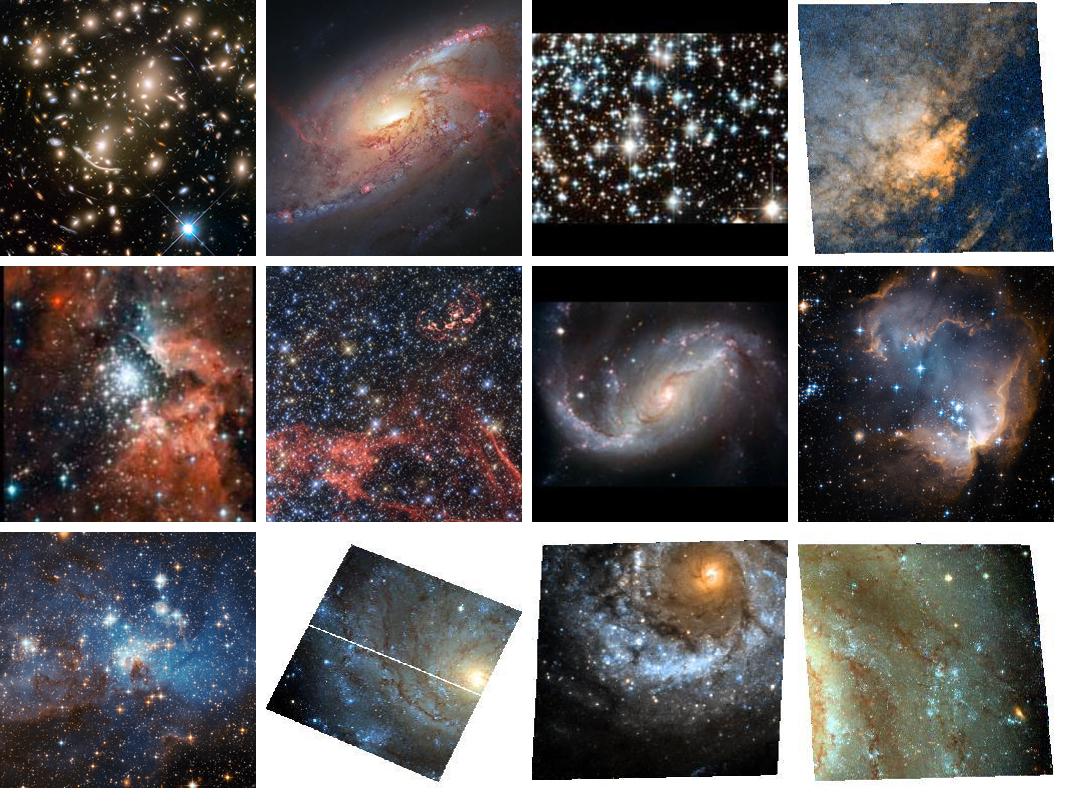

Dataset gathering

We began the project by scraping data from the Hubble Legacy Archive using a tool provided by Peh & Marshland (2019). The archive contained noisy, unprocessed images, which presented substantial challenges.

Filtering for images of the M101 (Messier 101) galaxy returned over 80,000 images, each differing by 1 degree in right ascension. Manually cleaning this dataset to isolate high-resolution, well-colored images proved very time-consuming.

To overcome this, we turned to the Hubble Heritage project, renowned for its high-quality astronomical images, from which we scraped approximately 150 valuable images. Additional images were collected from the main Hubble website, totaling around 1,000.

Due to computational limitations, we resized all images to 256 × 256 pixels with three RGB channels. For the colorization task, we also explored the Lab color space, which simplifies the problem: the lightness channel L serves as the grayscale input, so the network only has to predict the two chromaticity channels a and b. We trained models in both color spaces and compared their performance.
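The conversion to Lab can be sketched in NumPy as follows, assuming sRGB inputs scaled to [0, 1] and the D65 white point (the helper name `srgb_to_lab` is hypothetical). In practice a library routine such as `skimage.color.rgb2lab` does the same job; the sketch only shows how the L input and the (a, b) targets are obtained.

```python
import numpy as np

# Linear sRGB -> CIE XYZ matrix and reference white, both for the D65 illuminant.
_M = np.array([[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]])
_WHITE = np.array([0.95047, 1.0, 1.08883])


def srgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """Convert an (..., 3) array of sRGB values in [0, 1] to CIE Lab."""
    rgb = np.asarray(rgb, dtype=float)
    # Undo the sRGB gamma curve to get linear light.
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    t = (lin @ _M.T) / _WHITE
    # Piecewise cube-root function from the CIE Lab definition.
    delta = 6 / 29
    f = np.where(t > delta ** 3, np.cbrt(t), t / (3 * delta ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


image = np.random.rand(256, 256, 3)   # stand-in for a 256 x 256 RGB image
lab = srgb_to_lab(image)
gray_input, color_target = lab[..., :1], lab[..., 1:]   # L -> network input, ab -> target
```

White maps to L ≈ 100 with a ≈ b ≈ 0, and black maps to L = 0, which is a quick sanity check for any such conversion.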

Methodology

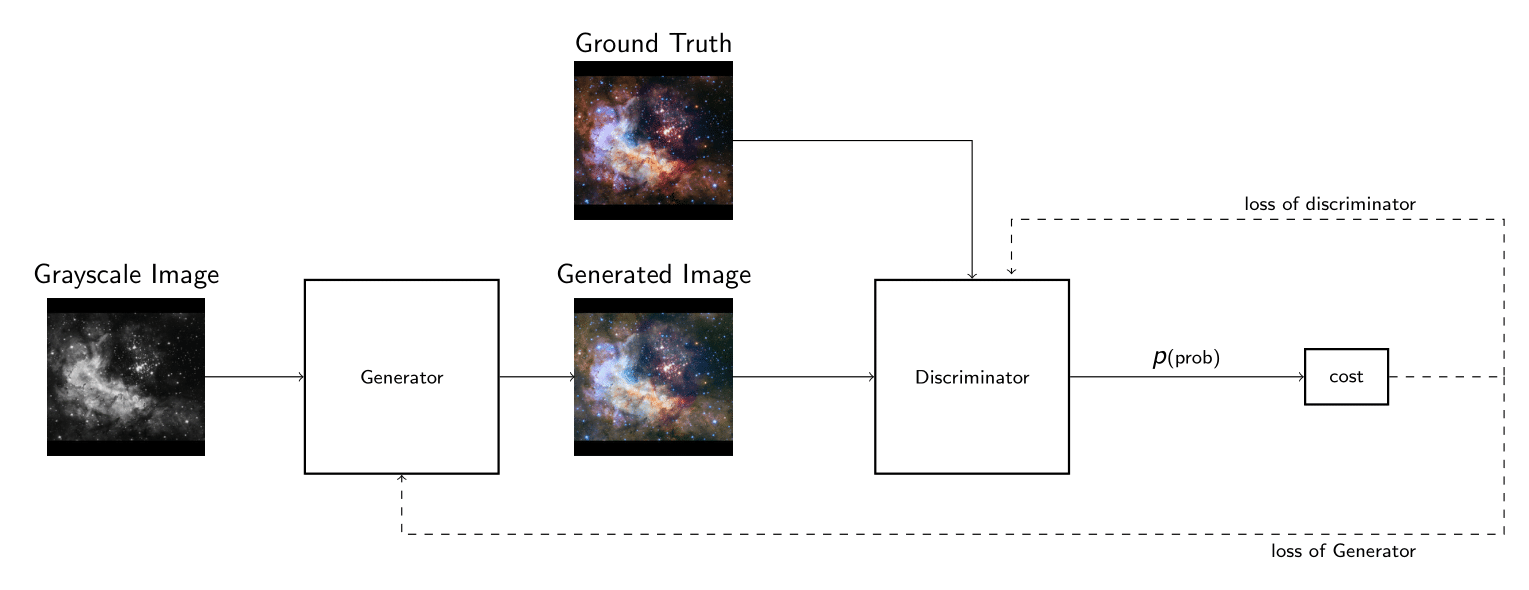

Isola et al. (2018) introduced a general-purpose solution for image-to-image translation tasks. Our approach builds on this methodology, with modifications aimed at reducing the amount of training data and the training time required while achieving similar results.

We use a U-Net generator with a pre-trained ResNet-18 network as its encoder. Many image-to-image translation tasks suffer from the "blind leading the blind" problem identified by Ledig et al. (2017): at the start of training, neither the untrained generator nor the untrained discriminator can give the other useful guidance. To address this, we first pre-train the generator on its own on the Common Objects in Context (COCO) dataset, using supervised learning with a mean absolute error (L1) loss. However, this deterministic training alone gives the generator no mechanism for rectifying incorrect predictions.
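A U-Net decoder mirrors its encoder and concatenates each encoder feature map with the decoder feature map of the same spatial size. The sketch below lists the stage outputs of a torchvision-style ResNet-18 for our 256 × 256 inputs, which are the skip connections such a decoder would consume. It assumes the standard ResNet-18 layout (7 × 7 stride-2 stem, stride-2 max-pool, four residual stages with strides 1, 2, 2, 2) and an input size divisible by 32; the helper name is hypothetical.

```python
def resnet18_encoder_shapes(size: int = 256) -> list[tuple[str, int, int]]:
    """Return (stage, channels, spatial side) for each ResNet-18 encoder stage."""
    stages = []
    s = size // 2                  # 7x7 convolution with stride 2
    stages.append(("conv1", 64, s))
    s //= 2                        # 3x3 max-pool with stride 2
    stages.append(("layer1", 64, s))
    for name, channels in (("layer2", 128), ("layer3", 256), ("layer4", 512)):
        s //= 2                    # first residual block of each stage has stride 2
        stages.append((name, channels, s))
    return stages


for stage in resnet18_encoder_shapes(256):
    print(stage)
```

The decoder then upsamples 8 → 16 → 32 → 64 → 128 → 256, concatenating the matching encoder map at each step before predicting the output channels.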

To overcome this, we subject the pre-trained generator to adversarial training, further enhancing its generalization. We hypothesize that this adversarial retraining will help resolve subtle color differences.
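The adversarial losses used in this phase can be sketched as follows, assuming the binary cross-entropy (non-saturating) GAN formulation of pix2pix applied element-wise to the discriminator's patch outputs. NumPy stands in for a deep-learning framework here, so no gradients are computed, and all function names are hypothetical.

```python
import numpy as np


def bce(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(pred, eps, 1 - eps)  # avoid log(0)
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """D should score real pairs as 1 and generated pairs as 0 (halved, as in pix2pix)."""
    return 0.5 * (bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake)))


def generator_adv_loss(d_fake: np.ndarray) -> float:
    """Non-saturating generator loss: push D's scores on generated images towards 1."""
    return bce(d_fake, np.ones_like(d_fake))


# Hypothetical patch-probability maps from the discriminator for one batch.
d_real = np.full((30, 30), 0.9)
d_fake = np.full((30, 30), 0.2)
print(discriminator_loss(d_real, d_fake), generator_adv_loss(d_fake))
```

The generator and discriminator are updated in alternation, each minimizing its own loss.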

The discriminator in our framework is based on the "PatchGAN" discriminator proposed by Isola et al. (2018). Instead of a single real/fake score, it outputs a grid of probabilities, one for each local patch of the input image, which lets us pinpoint the regions of the generated image that require correction.
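The size of that grid and of the patch behind each score follow from the convolution arithmetic. The sketch below assumes the 70 × 70 PatchGAN configuration described by Isola et al. (three stride-2 and two stride-1 convolutions, all with 4 × 4 kernels and padding 1); whether our discriminator used exactly these layers is an assumption, and the helper names are hypothetical.

```python
# Each tuple is (kernel, stride, padding) of one convolution in the assumed
# 70 x 70 PatchGAN configuration.
PATCHGAN_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 1), (4, 1, 1)]


def patch_grid_size(n: int, layers=PATCHGAN_LAYERS) -> int:
    """Side length of the discriminator's output grid for an n x n input."""
    for k, s, p in layers:
        n = (n + 2 * p - k) // s + 1  # standard convolution output-size formula
    return n


def receptive_field(layers=PATCHGAN_LAYERS) -> int:
    """Side length of the input patch that influences a single output score."""
    rf, jump = 1, 1
    for k, s, _ in layers:
        rf += (k - 1) * jump  # each layer widens the field by (k - 1) input steps
        jump *= s             # input-pixel distance between adjacent outputs
    return rf


print(patch_grid_size(256), receptive_field())  # prints: 30 70
```

For a 256 × 256 input this gives a 30 × 30 grid of scores, each judging an overlapping 70 × 70 region of the image.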

In the final phase, we fine-tune the trained generator and discriminator on our relatively small astronomical dataset. Both networks are trained adversarially using the conditional GAN objective of Isola et al. (2018), extended with an L1 term that measures the distance between the generated image tensor and the target image tensor. The generator is thus trained with an adversarial component while also minimizing the Manhattan distance between its output and the target.
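For reference, the objective of Isola et al. (2018) can be written as below, where $x$ is the conditioning input (the grayscale image or L channel), $y$ the target image, $z$ a noise source (which pix2pix supplies through dropout) and $\lambda$ a hyperparameter weighting the L1 term.

```latex
\begin{aligned}
\mathcal{L}_{cGAN}(G, D) &= \mathbb{E}_{x,y}\big[\log D(x, y)\big]
  + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big] \\
G^{*} &= \arg\min_{G} \max_{D} \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
\end{aligned}
```

The adversarial term encourages realistic local texture and color, while the L1 term keeps the output close to the target at low frequencies.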

We also performed up-scaling using the SRGAN architecture proposed by Ledig et al. (2017); more information about this part of the project can be found in the linked publication.
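The SRGAN generator of Ledig et al. (2017) increases resolution with sub-pixel convolution layers (Shi et al., 2016): a convolution produces r² times as many channels, which are then rearranged into an image r times larger on each side. The NumPy sketch below shows only that rearrangement step (often called pixel shuffle), following the same index convention as PyTorch's `nn.PixelShuffle`; the function name is hypothetical and it is not taken from our implementation.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange a (C * r**2, H, W) array into (C, H * r, W * r)."""
    c_in, h, w = x.shape
    if c_in % (r * r):
        raise ValueError("channel count must be divisible by r**2")
    c = c_in // (r * r)
    # Split channels into (c, r, r) sub-pixel offsets and interleave them with the
    # spatial axes, so that out[c, h*r + i, w*r + j] = x[c*r*r + i*r + j, h, w].
    return (x.reshape(c, r, r, h, w)
             .transpose(0, 3, 1, 4, 2)
             .reshape(c, h * r, w * r))


features = np.random.rand(12, 64, 64)     # e.g. 3 output channels x 2**2 sub-pixels
print(pixel_shuffle(features, 2).shape)   # prints: (3, 128, 128)
```

SRGAN's ×4 generator applies two such ×2 stages in sequence, each preceded by a learned convolution.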